This paper presents an empirical study based on a set of measures to evaluate the usability of mobile applications running on different mobile operating systems, including Android, iOS and Symbian. The aim is to evaluate empirically a framework that we have developed on the use of the Software Quality Standard ISO 9126 in mobile environments, especially the usability characteristic. To do that, 32 users participated in the experiment, and we used the ISO 25062 and ISO 9241 standards for objective measures by working with two widely used mobile applications: Google Apps and Google Maps. The QUIS 7.0 questionnaire was used to collect measures assessing the users’ level of satisfaction when using these two mobile applications. By analyzing the results, we highlighted a set of mobile usability issues that are related to the hardware as well as to the software and that need to be taken into account by designers and developers in order to improve the usability of mobile applications.

In 2012, the average use of smart phones increased worldwide by 81 % over 2011, and the average download of mobile applications increased to 342 MB per month and per smartphone, compared to 189 MB in 2011 (Cisco Visual Networking Index 2014). The Gartner group study (2014) reports a yearly increase of 19 % in sales of mobile devices for 2011. Furthermore, in 2013 nearly 102 billion mobile applications were downloaded, versus 64 billion in 2012. This number is expected to increase to 254 billion downloads in 2017 (Gartner 2014). These statistics show that smart phones and tablets have invaded the daily lives of consumers: at home, at work, and in public places. Indeed, according to the data provided by Sales Force Marketing Cloud (2014), 85 % of people with smart phones consider their devices an inseparable part of their lives.

Consequently, the growing number of mobile users drives the growth of mobile applications (i.e., apps) that are available on download platforms such as the App Store and the Play Store.

In our earlier framework on the use of the software quality standard ISO 9126 in mobile environments, we identified several mobile limitations that may affect the quality of apps. Some of them often have a negative effect on the usability of apps, such as smaller screen size, low display resolution, low memory, and the context in which the mobile device is used (Idri et al. 2013). Therefore, the evaluation of the usability of apps, which must be initiated before the launch of the apps, is considered a new area of research (Kjeldskov and Stage 2004). Usability evaluation and remedial actions can help developers to meet the needs of users by designing easy-to-use apps.

However, few studies have been carried out on the use of ISO 25062 and ISO 9241 to evaluate the software quality of apps and to address the limitations of mobile environments. Most have focused on evaluating the usability of very specific types of apps, such as the Satnav applications (Hussain and Kutar 2009, 2012a), mobile geo-applications (van Elzakker et al. 2008), and mobile tourism applications (Ahmadi and Kong 2008; Geven et al. 2006; Schmiedl et al. 2009; Shrestha 2007; Echtibi et al. 2009).

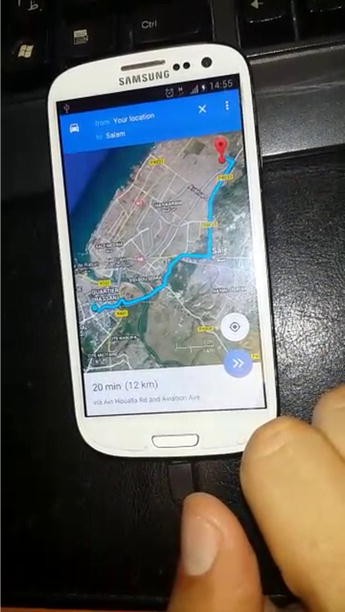

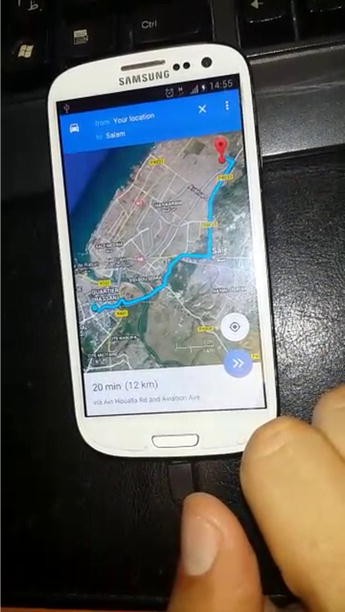

The aim of this study is to present an empirical evaluation of our framework developed on the use of the software quality standard ISO 9126 in mobile environments (Idri et al. 2013), especially the influence of the limitations of these environments on the usability of apps running on existing operating systems (OS). To do that, we relied on the ISO 9241-11:1998 and ISO 25062:2006 standards for the usability evaluation. Thirty-two users participated in the experiment with different types of smart phones (Android, iOS, etc.). They were asked to perform a set of defined tasks for Google Apps and Google Maps, which were selected as test cases to investigate usability problems. The Questionnaire for User Interaction Satisfaction (QUIS 7.0) (Hussain and Kutar 2012a) was used to assess the users’ satisfaction level.

The paper is structured as follows: “Using ISO 9126 for software quality in mobile environments” section presents the challenges of mobile environments and describes our earlier framework based on ISO 9126 to determine the software quality characteristics that may be influenced by the limitations of mobile environments. “Usability evaluation of mobile applications” section defines the usability evaluation of apps according to ISO standards, presents the existing usability evaluation methods, and describes the components of the context of use as proposed by ISO 9241. “Experiment design” section describes the design of the experiment. “Discussion and interpretation” section discusses the results, presents a set of mobile usability issues that developers must take into consideration when designing user-friendly mobile applications, and presents the threats to validity. Finally, findings are discussed and future work is presented in “Conclusion and future work” section.

In our previous study (Idri et al. 2013), we developed a framework in order to use ISO 9126, particularly its external quality model, to deal with the limitations of mobile environments. These limitations fall into two subcategories: (1) limitations of mobile devices, such as limited energy autonomy, limited user interface and limited storage capacity; and (2) limitations of wireless networks, namely frequent disconnection and lower and variable bandwidth. The framework is based on an analysis process that was designed to take the limitations of mobile environments into consideration. The process is applied to the six external quality characteristics (functionality, reliability, usability, efficiency, maintainability, and portability) and consists of three steps, as shown in Fig. 1 (Idri et al. 2013).

Table 1 shows the results of the application of the analysis process on the six characteristics of ISO 9126 external quality. We noted that:

To determine the level of experience of participants in the use of their smart phones, the following question was asked: “how long have you possessed this smart phone?” The answers ranged from 15 days to 3 years. The rationale is that people who have owned their devices for longer handle them more proficiently, which helps them to perform tasks more quickly and reliably. Figure 3 shows the number of participants according to the period of mobile device possession: 38.71 % of participants had owned their smart phones for less than 6 months and only 9.68 % of participants had owned their smart phones for more than 2 years.

The aim of this study is to evaluate the influence of the screen size of mobile devices on the usability of apps, and to ensure that the identified usability issues are not related to a very specific platform. To do that, the experiment was opened to users with devices running various OS platforms. Figure 4 shows the distribution of participants by platform. Android is the most used platform with 66.67 % of participants, followed by iOS with 26.67 %. The Android devices used in this study were: S4, S3, S3 Note, S3 mini, S2, S1, S young and HTC. The iOS devices were iPhone 5/5S and iPhone 4/4S, and the Symbian devices were Nokia E72 and Nokia Music.

The experimental evaluation was based on ISO 9241-11:1998 and ISO 25062:2006. Effectiveness and efficiency were measured and collected via video recordings of users performing tasks, in the form of the objective measures shown in Table 2. Satisfaction was measured subjectively through questionnaires.
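As a rough illustration of how objective measures of this kind can be derived once the recordings have been coded, the sketch below computes a completion rate (as an effectiveness indicator) and a mean time on task (as an efficiency indicator). The `TaskRecord` structure, the field names and all values are assumptions made for the example; they are not the measures of Table 2 nor data from the experiment.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    """One task attempt coded from a video recording (hypothetical structure)."""
    participant: str
    task: str
    completed: bool   # did the user reach the task goal?
    seconds: float    # time on task, read off the recording
    errors: int       # number of wrong actions observed


def effectiveness(records):
    """Completion rate: share of attempts that reached the goal (0..1)."""
    return sum(r.completed for r in records) / len(records)


def mean_time_on_task(records):
    """Efficiency indicator: mean time of the completed attempts, in seconds."""
    times = [r.seconds for r in records if r.completed]
    return mean(times) if times else None


# Illustrative values only; these are not data from the experiment.
records = [
    TaskRecord("P01", "search address", True, 42.0, 0),
    TaskRecord("P02", "search address", True, 58.0, 1),
    TaskRecord("P03", "search address", False, 120.0, 4),
    TaskRecord("P04", "search address", True, 50.0, 0),
]

print(f"effectiveness: {effectiveness(records):.2f}")
print(f"mean time on task: {mean_time_on_task(records):.1f} s")
```

Restricting the mean time to completed attempts is one possible design choice; including failed attempts would mix time spent succeeding with time spent giving up.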

Two questionnaires were also used as instruments in this experiment: a General Information Questionnaire and the QUIS 7.0 for satisfaction measures. The latter is well recognized in the field of user satisfaction and has been used in several mobile usability evaluation studies (Hussain and Kutar 2012a). The QUIS 7.0, designed by a group of researchers in Human–Computer Interaction at the University of Maryland, was used to collect the users’ opinions and to evaluate their satisfaction with different aspects of an interface on a 9-point scale (Chin et al. 1988). It includes the following components: a demographic questionnaire, the evaluation of the system satisfaction via six scales, and the measures of nine specific interface factors: screen aspects, terminology and system information, learning aspects, system capabilities, technical user guides, online help and online tutorials, multimedia, conferencing, and methods of software installation. Each interface factor has a main question followed by sub-questions, each of which is rated on a scale of 1–9, with positive adjectives on the right, negative ones on the left, and an NA (not applicable) option. The questionnaire also contains free-text boxes for each section that allow the user to list comments and provide feedback (Harper et al. 1990).

First, a General Information Questionnaire was given to users in order to describe their knowledge of both apps and their familiarity with smart phones. Figure 9 shows a screenshot of a part of this questionnaire. According to their answers, users were divided equally into two groups. Users who had never worked with Google Apps but had already worked with Google Maps were assigned to the Google Maps group. This assignment is independent of the level of mastery of each application, in order to have a heterogeneous group of users: novice, experienced, and expert. Likewise, people who had already worked with Google Apps were assigned to the Google Apps group.

After the assignment to groups, users started installing the apps on their devices. All devices were connected to the internet through Wi-Fi, with the exception of those of four users from both groups, who worked over 3G so that the influence of the variable bandwidth limitation on usability could be observed. The participants were also asked to think aloud during the experiment, using the voice recorders of their devices, and an eye-tracking technique was used to follow the movement of users’ eyes while they performed tasks. We were interested in the way in which they think and interact with the apps. Thinking aloud allowed each user to make his thoughts and ideas audible, which helps to understand what is going on in his mind during the use of the apps. In addition, an expert user was selected on the basis of more than 1 year’s experience of using the apps with the same device as in the experiment.

Different tools were used to record videos of the users’ task execution: @screen for Android devices, Recordable.mobi for the eye-tracking technique (Fig. 6), and the Reflector tool for iOS devices, which was installed on a Mac laptop as shown in Fig. 7.

Following the execution of tasks, users filled in the QUIS 7.0 as shown in Fig. 10. This experiment led to four deliverables for each participant: (1) the recorded video, (2) the recorded voice, (3) the completed QUIS 7.0, and (4) the data collected. Note that we obtained the necessary permission from users to reproduce the experiment images and their pictures in this paper.

This section discusses the results of the empirical validation of our framework, in particular those concerning the usability characteristic. The experiment report was based on the guidelines proposed by ISO 25062:2006 as a standard method for reporting results of usability evaluation.

Table 3 presents the objective measures (Table 2) derived from the video recordings of the execution of tasks. Table 4 presents the subjective user-satisfaction measures corresponding to the users’ answers to QUIS 7.0. The data analysis and graph generation were conducted by means of the Statistical Package for the Social Sciences (SPSS) under a Microsoft environment. This discussion is structured to answer the two questions representing the aim of this experiment.

This section concerns the results obtained by analyzing the answers of participants to the QUIS 7.0 questionnaire. Table 4 shows the mean, the median and the standard deviation of the subjective measures for both apps, Google Apps and Google Maps. The questionnaire answers have been classified on a 9-point scale: 9 means excellent, 6–8 means very good, 4–6 means good, 2–4 means fair and 1–2 means poor.
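The three descriptive statistics reported for each subjective measure can be reproduced with a few lines of code. The sketch below is only an illustration: the ratings are made up, NA answers are assumed to be excluded before computing, and the sample standard deviation (n − 1 denominator) is assumed, which may or may not match the settings used for Table 4.

```python
from statistics import mean, median, stdev

NA = None  # marker for a "not applicable" answer


def summarize(ratings):
    """Return (mean, median, sample standard deviation) of the 1-9 ratings, ignoring NA."""
    valid = [r for r in ratings if r is not NA]
    if any(not 1 <= r <= 9 for r in valid):
        raise ValueError("QUIS ratings must lie between 1 and 9")
    return mean(valid), median(valid), stdev(valid)


# Illustrative answers to one QUIS item; not data from the experiment.
overall_reaction = [7, 8, 6, NA, 9, 7, 5, 7]

m, med, sd = summarize(overall_reaction)
print(f"mean={m:.2f} median={med} sd={sd:.2f}")
```

Reporting the median alongside the mean is useful for ordinal ratings such as these, since a few very low scores from small-screen devices can pull the mean down without changing the typical answer.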

The overall reaction to Google Apps was very good (median and standard deviation equal to 7 and 1.67, respectively) and higher than that to Google Maps (median and standard deviation equal to 5.41 and 2.15, respectively), despite the ease and simplicity of the latter application. This may be due to two reasons: (1) there were two novice users who were working with Google Maps for the first time; (2) there were two users with Nokia E72 devices, which are characterized by a very small screen and a limited storage capacity, which made performing the different tasks very difficult and not at all obvious.

The majority of participants were satisfied with Google Apps, with a very good median value of 7 for the overall reaction measure. They found Google Apps very challenging and impressive, especially on devices with a large screen, high display resolution, large memory and good computing power, such as S3, S4, S3 Note, and iPhones. One participant was an exception: he could not perform the tasks with his own device, a Wing W2 (Android 4.2), because its Android version supported neither Google Calendar nor Google Doc, so he worked with another device. For Google Maps, the median was good, with a value of 5.4; this is due to some participants who did not like the application on their smart phones because of the screen size and low memory, as was the case for S2, S3 mini, Nokia Music and Nokia E72.

For the Screen, all participants gave at least 8 of 9 points to the overall reaction when they used S3, S4, S3 Note, iPhone 5, and iPhone 5s, which have large screens. However, as shown in Fig. 13, poor and fair values for the Screen evaluation were given for Nokia E72, Nokia Music, S young, S3 mini, and S2, whose screens are too small. Viewing and editing files in Google Apps was therefore stressful and difficult on these devices, as users had to rotate the screen for better visibility and a good display. The disabled participant was satisfied with S3 Note, as it has a large screen that allowed him to work easily and quickly.

Regarding Learning and technical On-line help, the median values were 6.25 and 4.5, respectively, for Google Maps against 6.75 and 6 for Google Apps. This may be explained by the absence of help messages and online user manuals for the apps on certain types of devices, particularly for Google Maps on Nokia devices, where users had to fumble to find out how to accomplish the various tasks. Yet these two measures play a major role in easy and time-efficient use, since they concern learning how to operate the app with help messages on the screen and online user guides. For example, Google Apps was impossible to use on Nokia E72 devices because of the screen size and low memory, and Google Maps was very difficult to use on this type of device. Therefore, the novice users were blocked at the beginning and needed time to work out how to operate this app and to become familiar with it on their devices.

Figure 13 shows that participants were satisfied and very pleased with smart phones that are fast and have a large screen and a very high resolution, such as S3, S4, S3 Note, and iPhone 5/5s, unlike the other types of devices, which are very slow and whose screen size was stressful, with a poor-quality information display.

However simple a mobile application or the work to be done on a smartphone may be, everything depends primarily on the characteristics of the device. Thus, despite the complexity of the Google Apps tasks compared to those of Google Maps, which were very easy, most users enjoyed working with Google Apps given the power of their devices, their speed, and their high-quality screen resolution.

In summary, the objective measures identified by comparison with the expert data of both apps are: Time to install, Time to learn and use, Tasks time and Data entry time. Concerning the subjective measures, we focused on the Overall Reaction, the Screen Evaluation and the Technical Online Help according to the median value. All these measures depend on several factors, as indicated in “Using ISO 9126 for software quality in mobile environments” section: the mobile context, the connectivity, the data entry methods, the presence of technical online help, and the characteristics of devices, especially the screen size, the display resolution, low memory, type of keyboard, etc.
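One simple way to compare a participant's objective measures with the expert data is to express each time as a multiple of the expert's time. The sketch below illustrates the idea; the timings, the measure names used as dictionary keys and the `THRESHOLD` cut-off are all hypothetical and are not taken from Table 3.

```python
def ratio_to_expert(user_seconds, expert_seconds):
    """How many times longer than the expert the user needed (1.0 = same time)."""
    if expert_seconds <= 0:
        raise ValueError("expert time must be positive")
    return user_seconds / expert_seconds


# Illustrative timings in seconds; not data from the experiment.
expert = {"time to install": 30.0, "task time": 40.0, "data entry time": 10.0}
user = {"time to install": 45.0, "task time": 100.0, "data entry time": 10.0}

THRESHOLD = 2.0  # hypothetical cut-off for flagging a measure

ratios = {name: ratio_to_expert(user[name], expert[name]) for name in expert}
flagged = [name for name, r in ratios.items() if r >= THRESHOLD]

for name, r in ratios.items():
    print(f"{name}: {r:.2f}x expert time")
print("flagged:", flagged)
```

A ratio has the advantage of being comparable across measures with very different absolute durations, such as installation time and data entry time.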

According to the mobile users involved in this experiment, the screen size, the display resolution and the storage capacity were the main limitations of mobile devices that affect the usability of apps. Indeed, the higher the screen resolution, the more space the user has for playing games, reading text, viewing files and reports, taking pictures and recording videos. In addition, the low storage capacity of mobile devices also affects the ease of use of apps. This problem becomes a handicap especially for smart phones that do not have a memory card, since it prevents the user from installing some large apps. However, the influence of the other mobile limitations, such as the connectivity, the mobile context and the data entry methods, on the usability of apps must also be taken into account during the usability evaluation.

As a conclusion of this experiment, the results obtained confirm what we found when applying the framework to the external usability metrics: the limited user interface has a strong influence on the majority of the external metrics of the usability characteristic (21 of 27 metrics are influenced). Limited storage capacity has a weak influence (2 of 27 metrics) on the usability characteristic when compared to other ISO 9126 quality characteristics (ISO 9126-2 2001). These results are to be expected, since usability is related to the challenges of using the software product, and these challenges depend on the difficulties encountered when the user interface is operated.
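Expressed as proportions of the 27 external usability metrics, the two counts quoted above work out as follows (simple arithmetic on the figures in the text, rounded to one decimal place):

```latex
\[
\frac{21}{27} \approx 77.8\,\% \quad \text{(limited user interface)},
\qquad
\frac{2}{27} \approx 7.4\,\% \quad \text{(limited storage capacity)}
\]
```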

In addition to the two hardware limitations, other issues related to the software and to the mobile context were identified: the absence of technical manuals and online help, the limited and variable bandwidth of networks, and complicated data entry methods. Therefore, mobile application designers and developers must provide users with easy and simple interfaces that can be used by any kind of user: novice, experienced and expert. These applications must also be equipped with user guides and online manuals.

Therefore, the evaluators of the usability characteristic should take these mobile limitations into consideration by using ISO 9126 measures or by suggesting new measures for mobile environments.

The empirical validation we have performed is limited by a number of factors (Johnson 1998b; Petrie et al. 1998). Threats to construct, internal and external validity are discussed below.

“The construct validity is a matter of judging if the treatment reflects the cause construct and the outcome provides a true picture of the effect” (Dumas and Redish 1999; Nielsen and Landauer 1993). Since the objective measures were collected through video recordings and the subjective measures via questionnaires, we consider that the data collected reflect reality.

Internal validity is related to the validity of the study within the environment used and the reliability of the results obtained. The empirical evaluation was performed in a controlled environment, which constitutes a threat to internal validity because users were shielded from interruptions and noise. However, users took a little time to become familiar with the environment before starting the experiment; as a result, this threat could be minimized.

External validity is related to the generalization of findings. The sample of the experiment was small (32 participants), which constitutes a clear threat to external validity. Another limitation of this experiment is that it was based on only two widely used mobile applications, Google Apps and Google Maps, which were proposed by participants. Therefore, it is difficult to generalize the results to other mobile apps and mobile sites.

This paper has presented an empirical evaluation of our framework developed on the use of the software quality standard ISO 9126 in mobile environments, especially the effects of mobile limitations (limited user interface, frequent disconnection, lower bandwidth, etc.) on the usability of apps, by means of the ISO 25062 and ISO 9241 standards. To do this, an experiment was conducted by giving 32 users a set of tasks to be performed on their devices and allowing them to think aloud while using both Google Maps and Google Apps. The aim was to identify and to highlight the usability issues when using apps. In this experiment, we collected objective measures from video recordings, as well as subjective measures via the QUIS 7.0 questionnaire. The results obtained were analyzed and interpreted on the basis of the expert data, the users’ descriptions, and the devices’ characteristics. Hence, we identified a set of challenges when using apps that are related to the characteristics of the device (hardware), such as the screen size, the display resolution and the memory capacity, which validate the findings of our framework. In addition, other issues were identified that are related to the application itself (software), such as the presence of online help and user guides, the use of simple data entry methods, etc. Thus, owning a smart phone with a large screen is a good thing because this screen is very convenient and may make everything easier to use. It may serve as an e-book reader, and it may be turned into a console to play games easily. In addition, to address the software-related problems, user guides and online help must be made available, especially to novice users, so that they can learn how to operate most apps.

Further research will be initiated to carry out this experiment in the field with a larger sample of users, in order to detect new usability issues of apps. Thereafter, empirical evaluations should be conducted to validate the other analytical findings concerning the reliability, efficiency, and functionality characteristics.

KM and AI developed and evaluated the framework for assessing the usability of mobile applications. AA participated in the analysis and the interpretation of the results of this empirical study. All authors read and approved the final manuscript.

The authors declare that they have no competing interests.

We declare that this research was carried out according to our institution’s guidelines.